Looking back, I have spent most of my 3 years of product-building experience building applications for people who want to build their own solutions. The latest advances in AI and its ability to generate code can empower people to build tools in ways that were not possible before.

That's why I am embarking on a long journey to build a better application builder for people who are not technical, leveraging the latest advances in AI. I want to start by explaining why this is the problem I want to work on for the long term.

Why do I want to do this?

Before I became technical, I was a recruiter for teachers. Back then I wanted to build an application portal for teachers to apply to schools. It would have saved me so much repetitive work and made the job-searching experience 10x better.

Most off-the-shelf solutions cater to traditional HR, not teachers. So I wanted to build one myself. But I gave up before I could build a single screen that was even remotely functional. The learning curve of coding back then was too steep for me.

2 years later, when I started designing products for enterprises, I saw the same thing with companies. They wanted technology to automate their processes or solve their bottlenecks, but they just didn’t know where to start. Lucky for them, they hired us, and we had the tools to build those technologies for them.

But there was a lot of back-and-forth between us and them. They were deeply engaged in and knowledgeable about their problem space, so it was hard for us, armed with only general technical knowledge, to envision how technology would help them tackle their problems.

After 2 years of building products for organizations that safeguard our freedom, stop pandemics from happening, or make cargo arrive on time worldwide, I think the people working in these organizations should make their own tools. In fact, the easier it is for everyone to make their own tools, the more effective our society can be.

Instead of building products used by millions of people, I am much more interested in helping 100 organizations build their own tools that each impact millions of people with their reach.

I want to help people who are deep in their problem space build technology solutions that solve some of their problems.

Why now?

I started designing tools for builders and impactful organizations in 2020. But I believe now is a critical inflection point for tools that help people build.

Technological tools, at the end of the day, have to interface with computers in the form of code. Even though programming languages have become more abstract over the years, with more natural language concepts and semantics added to them, I am convinced that code will still be around to instruct computers for many years to come. Tools that leverage technology, at some level beneath the user interface, still run on code.

And by code, I mean text-based instructions, written with very rigid logic and syntax, that instruct computers to do simple yet repetitive tasks.

But with the rise of Generative AI, code becomes much easier to write. Or code itself can become much more abstract, more like natural language, and less rigid.

There were attempts in the past to make coding more like writing, but they still had their own rigid logic and syntax that the writing needed to abide by. They mostly replaced computer instruction concepts, such as conditionals, loops, and objects, with written language terms.

For the first time, we can write reliable (to a certain extent) and scalable code with natural language. We can input a request in our own words, and an LLM (mostly GPT) can spit out code that is accurate to a certain extent.

Rather than retaining the rigid logic and syntactic structure of programming languages, the LLM becomes a compiler that converts free-flowing, natural language instructions into code, which in turn runs technology tools.

This represents a huge paradigm shift in coding, and building technology products at large. But there are some challenges with coding solely using LLMs:

LLMs hallucinate when writing code, and coding requires an extreme degree of accuracy. Unlike in normal chat, where a hallucination alone does not wipe out the value of the response, even a small hallucination in code may break the whole program.

- A code interpreter that gives the LLM a code-error-fix loop is a good way to address this problem
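To make the code-error-fix loop concrete, here is a minimal sketch in Python. It assumes a hypothetical `generate(request, error)` function that wraps whatever LLM is used; `fake_llm` is only a stand-in so the example runs without a model, and none of these names come from a real library.

```python
import traceback
from typing import Callable, Optional

# Hypothetical signature: takes the request and the last error (if any),
# and returns Python source code as a string.
Generator = Callable[[str, Optional[str]], str]


def run_code(source: str) -> Optional[str]:
    """Run the source in a fresh namespace; return the traceback text on failure, else None."""
    try:
        # A real tool would run untrusted code in a sandbox, not in-process.
        exec(compile(source, "<generated>", "exec"), {})
        return None
    except Exception:
        return traceback.format_exc()


def code_error_fix_loop(request: str, generate: Generator, max_attempts: int = 3) -> str:
    """Ask for code, run it, and feed any error back until it runs or attempts run out."""
    error: Optional[str] = None
    for _ in range(max_attempts):
        source = generate(request, error)
        error = run_code(source)
        if error is None:
            return source
    raise RuntimeError(f"No working code after {max_attempts} attempts:\n{error}")


def fake_llm(request: str, error: Optional[str]) -> str:
    """Stand-in for a real model: the first answer has a bug, the retry fixes it."""
    if error is None:
        return "total = sum([1, 2, 3]) / 0"
    return "total = sum([1, 2, 3]) / 3"


if __name__ == "__main__":
    print(code_error_fix_loop("average of 1, 2, 3", fake_llm))
```

The loop only proves that the code runs without raising, not that it does what the builder asked for, so a real tool would likely need tests or human review on top of it.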

LLMs find it hard to understand relationships between entities if those relationships have never appeared in other scenarios. In a computer program, dependencies are a huge part, and the LLM needs to understand the connections between different parts of the program and reuse or reference them when necessary.

- There are some attempts to solve this problem: having a very large context window, digesting the codebase using context or embeddings, or asking the LLM to update a list of shared dependencies as it generates more complex code
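As a rough illustration of the shared dependencies idea, the sketch below keeps a running list of what each generated file defines and turns it into text that could be prepended to the next prompt. Note the simplification: the list here is derived by parsing the generated code with Python's standard `ast` module rather than by asking the LLM to maintain it. The `SharedDependencies` class and the file names are hypothetical.

```python
import ast
from typing import Dict, List


def exported_names(source: str) -> List[str]:
    """Return the top-level function and class names defined in a piece of code."""
    tree = ast.parse(source)
    return [
        node.name
        for node in tree.body
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef, ast.ClassDef))
    ]


class SharedDependencies:
    """Running list of what each generated file defines (hypothetical helper)."""

    def __init__(self) -> None:
        self.by_file: Dict[str, List[str]] = {}

    def record(self, filename: str, source: str) -> None:
        """Store (or overwrite) the names defined by one generated file."""
        self.by_file[filename] = exported_names(source)

    def as_prompt_context(self) -> str:
        """Render the list as plain text to include in the next generation request."""
        lines = ["Already defined (reuse instead of redefining):"]
        for filename, names in self.by_file.items():
            lines.append(f"- {filename}: {', '.join(names)}")
        return "\n".join(lines)


if __name__ == "__main__":
    deps = SharedDependencies()
    deps.record("models.py", "class Teacher:\n    pass\n\ndef load_teachers():\n    return []\n")
    deps.record("views.py", "def render_list(teachers):\n    return str(teachers)\n")
    print(deps.as_prompt_context())
```

This only tracks names, not how the pieces relate to each other, so it is a partial answer to the dependency problem at best.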

Combined, these two challenges pose a bottleneck for LLMs building complex technology tools by themselves. That’s why most generative code products that work focus on 1) single functionality generation (either a function or a page) or 2) fixing code (essentially understanding the codebase and rewriting a function or a page based on an error context).

I am not sure whether what I build will get around these challenges. But it is always worth a try to find solutions to these problems.

The paradigm of app builders

Before talking about app builders, let’s define what I mean by applications. An application is defined by its dynamism. Compared to other digital artifacts (e.g. documents, multimedia), it is…

Automatic: it can run by itself, without the intervention of a person

Replicable workflow: It can be run reliably many times

Adaptable and mutable: applications adapt to different inputs and produce different outputs (sometimes inputs and outputs follow a certain structure, i.e. schema)

Seamless integration with other services: using API or connectors to fetch information from and send information to external services (even embeds can be a way to integrate)

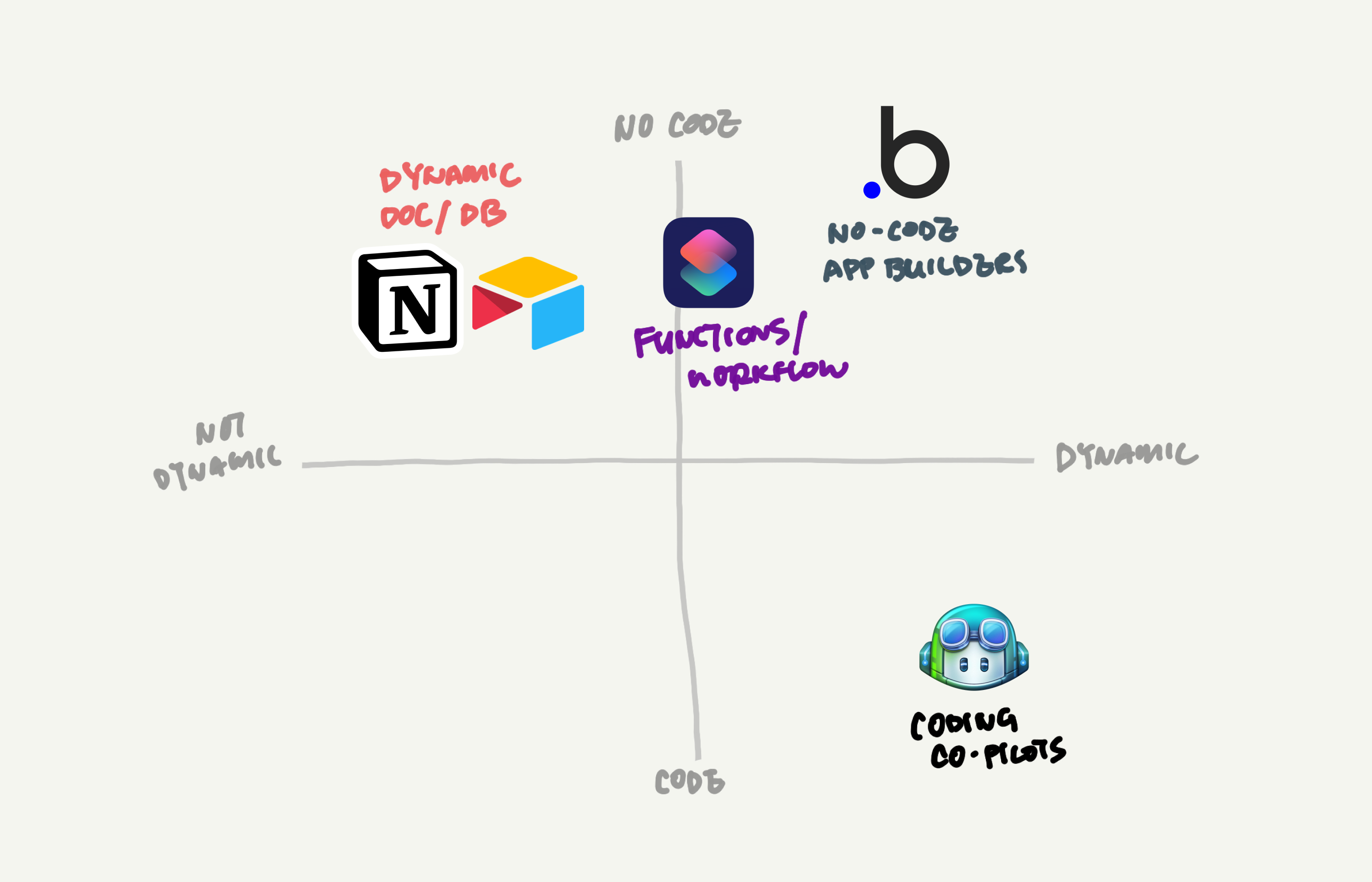

Back to app building. From what I am seeing, there are four paradigms of app builders in the market, differentiated by how much code the builder has to interact with and how dynamic the resulting applications can be.

On one end, we have coding copilots or agents. They write code, ranging from a single function with Copilots, to complete applications with GPT-engineer.

Next, we have no-code app builders. They provide a graphical interface for builders to build complete applications, whether it is a canvas where we can drag-and-drop UI components or a flow-based interface.

Further down the dynamism and code spectrum, we have function/workflow builders. Instead of building completely independent applications, these focus on automating certain workflows or mini-programs on top of a larger system. Examples include Shortcuts on iOS (formerly Workflow) and integrations on IFTTT (If This Then That).

Finally, we have dynamic documents or databases, popularized by Notion and Airtable. They are applications because the document morphs based on views (e.g. timeline vs Kanban board), they allow updates to the underlying data, and they can run very constrained programs and integrate with external services (also in a very constrained way).

I want to help builders build complete, end-to-end applications without learning to code. These are the gaps in the current paradigms for this purpose:

For coding copilots and agents, builders still have to touch code. Non-technical builders would still have to learn how to code in order to use these coding assistants.

Many no-code app builders have builders start from a blank slate, which intimidates first-time builders

No-code app builders also often have their own convoluted configuration systems, which present a steep learning curve for builders

Functions and dynamic documents just can’t support complete, end-to-end application building because they have to live on another platform

Based on these gaps, I want to present my vision for an AI-powered tool for building technological tools and applications.

What I am building

A no-code app builder that takes your user flow and generates a complete application that you can then tweak and modify to your liking.

There are a few key things that differentiate what I am building from other application builders in the market:

No-code: the builder does not need to interact with code when building the application

Never a blank slate: we will assist the builder to build at each step, and will not just present them with a blank slate and ask them to fill that in

Based on the builder’s existing mental models: instead of asking builders to adapt to a convoluted mental model of app building, we use concepts that are intuitive when people think about an application, such as steps, actions, visualization of information, etc.

Customizable: once the screens and logic at the backend are created, builders should be empowered to modify them to their liking

What makes all these principles possible are the latest advances in LLMs, which can understand purpose and context and generate accurate code based on that understanding.

An LLM can generate the starting point of a UI based on what builders want each step to do in the application. Then it can create the backend logic and database needed to support the front end, and ultimately the steps that the builder described.

Through the builder’s re-prompting, it can modify itself to fit the builder’s vision. In the end, while the builder’s experience is purely no-code, the artifact is a maintainable codebase that can be deployed anywhere and can be further customized using code.

Questions I am thinking about

There are many threads about AI coding, mostly centered around…

The difficulty of using code generation in a production environment for general cases, not just in a demo with a very constrained, almost hard-coded use case

The time it takes to debug AI-generated code, which might be more than the time humans would take to write and debug their own code

I am at the very beginning of this journey to leverage generative code to build something useful for general tool-building purposes. So I have many questions, both about the generative technologies themselves, and what an AI-powered tool builder would look like.

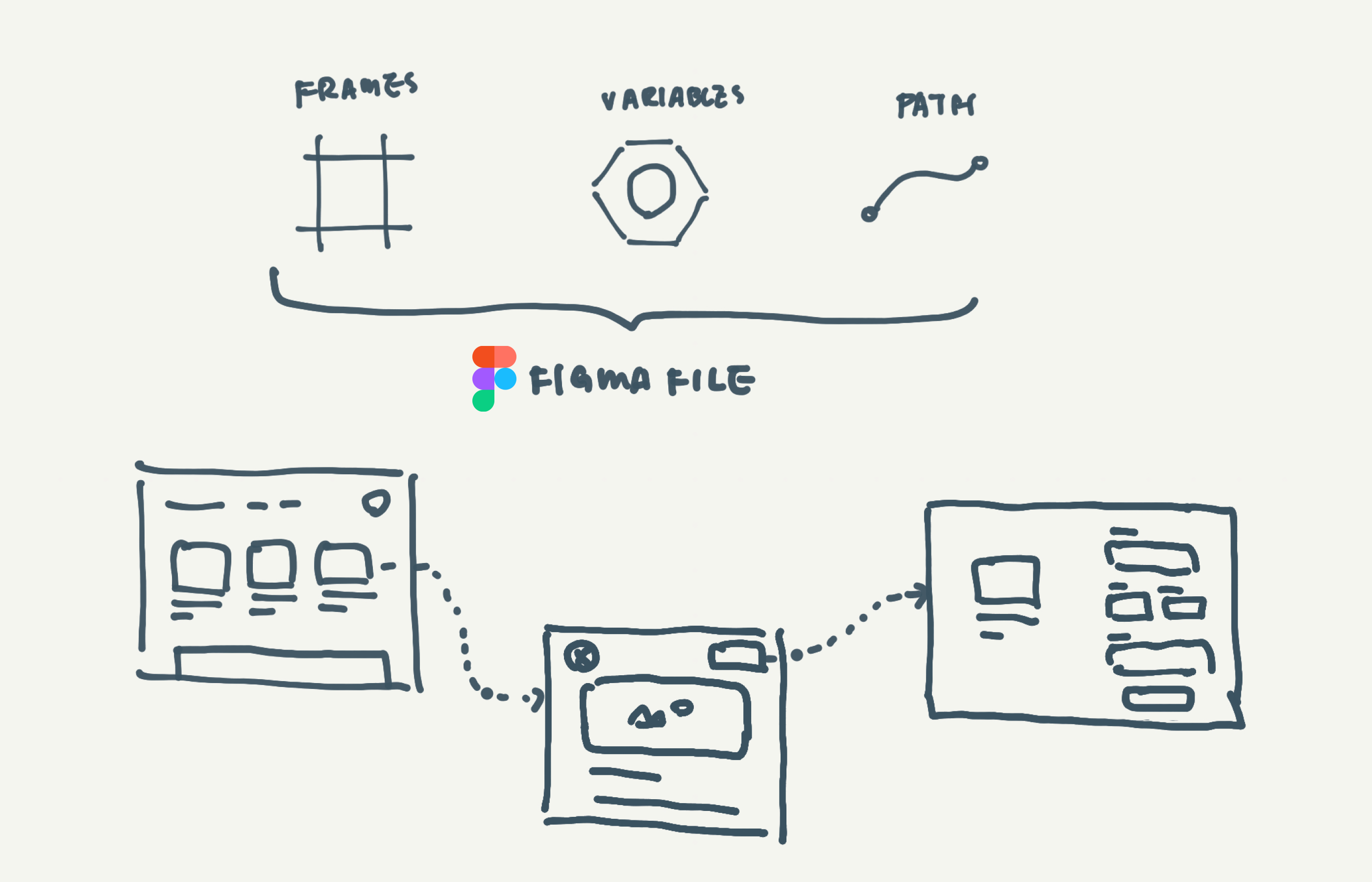

What is the artifact, or end product, of an application? Is it code, or is it higher-level, more abstracted artifacts, like flows, UI components, screens, etc?

What are the mental models and primitives builders will have to interact with? How do these primitives interface with the GenAI? What are some primitives that are required to build an application, but not necessarily exposed to the user?

How open-ended should this tool be? Can people build any applications they want with this? Or do I want to put some constraints on what they can build with this? What are the constraints I need to put in place?

Where should AI play a part in the whole app-building process? Should AI even be used? Or should it just be a drag-and-drop, connect-the-flows app builder?

A great example that I keep going back to is Figma’s primitives. Figma is mainly built upon three primitives: Frames, Paths, and Variables (newly added). Yet with these three simple primitives, people can build all sorts of cool things. They are easy to understand, yet highly extensible to various cases.

If an AI-powered tool builder wants to be like Figma, what would its mental models, primitives, UX, and AI interaction look like?

Next steps

Taking on this big problem by myself is daunting, yet exciting. There are many moving parts to this and even more questions and challenges I will have to confront.

Ultimately, there are a few things I am convinced of:

Teaching people how to fish is always more impactful than giving them fish; empowering people to build is always better than building for them

Code is still going to run our technology, but there are better, more abstract ways to think about, write, and reason with code that will be friendlier to non-technical people

Great UX that respects people’s existing mental models will be the biggest differentiation for most AI products

I am building small experiments to explore this problem space. This writing serves as both my public commitment to this problem space and an invitation for ideas and collaboration on experiments in it. Follow my Twitter @eugenechantk and this blog for my updates.

Time to get back to building!